Preface

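When I studied VMX before, I mostly started from fairly abstract documentation, so my understanding of the concepts stayed vague. Projects like KVM are also so large that reading them head-on wastes a lot of time on peripheral details. By chance I found that an Alibaba Cloud engineer had open-sourced a very small virtual machine implementation on GitHub: Peach. It has no practical use (its VM monitor is extremely simple and it implements no peripheral devices at all), but it gives you a clear picture of Intel VMX very quickly. The author also published articles on his WeChat official account explaining how to build it, but the 99-yuan price is a bit steep 😂. After reading the source I forked it and added comments to the key code; it is here. This post was written in a hurry and may contain quite a few mistakes; for every explanation, the Intel® 64 and IA-32 Architectures Software Developer's Manual Volume 3C: System Programming Guide, Part 3 is the authority.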

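Basic concepts

Let's start with a brief, abstract look at how a virtual machine built on VMX works. This part comes first; if you don't want to read code, you can stop after it.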

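Architecture

First, a figure borrowed from the book QEMU/KVM源码解析与应用 (QEMU/KVM Source Code Analysis and Applications):

The figure describes in detail how the parts of the QEMU-KVM model cooperate, and it is fairly complex. Peach VM is built in a similar way but leaves out a lot, so we can simplify it and look only at the VMX-related parts.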

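How the parts work together

In the figure, the blue parts are the software implementation of the virtual machine. It consists of a user-space program (such as qemu-system) and a kernel module (such as kvm), running in ring 3 and ring 0 respectively, which communicate through Linux file operations such as open and ioctl. The gray parts are the host operating system and applications. The orange and yellow parts are the guest operating system and applications; together they run inside a virtualized environment and, from their point of view, it looks no different from a normal machine. The purple parts are the VMXON region and the VMCS region: the VMXON region exists for as long as VMX operation is enabled, whereas a VMCS region belongs to a particular VM instance and holds the host and guest context used while the VM runs.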

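One odd point here: why keep both host and guest context? Naively, implementing a VM should only require maintaining the VM's own state. Looking closer, though, host and guest run in two separate modes, VMX root and VMX non-root operation, and all guests run in non-root mode. Switching between the two happens through VM exits and VM entries, which, as the names suggest, move execution back and forth between the virtualized environment and the host. Because VMX runs guest code directly on the logical CPU acting as a vCPU, with no instruction translation in software, every switch in either direction must preserve the current context so that execution can resume later. Concretely, on a VM exit the processor saves guest state into the guest-state area of the VMCS and loads host state from the host-state area, which the VMM fills in beforehand; on a VM entry it loads guest state from the guest-state area.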

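Another naive question I'll answer myself: why does a running VM need VM exits so often? The short answer is simple; the long answer starts with microcomputer fundamentals (just kidding). While a VM runs it performs many hardware I/O accesses; it may also execute VMCALL or HLT, take a page fault, or touch registers of special devices, and I/O is by far the most frequent of these. Such operations cannot be completed by the VMX hardware on its own, so control goes back to the VM monitor, which then picks one of the following ways to handle them:

- Ignore it: skip the instruction and perform a VM entry.
- Handle it in the host kernel module, then likewise perform a VM entry.
- Return to the user-space program (such as qemu-system) and let it handle the event. This is common, because most virtual devices (RAM, the PCI bus and its devices, the ISA bus and its devices, the north and south bridges, VGA devices and so on) are implemented in user space, which makes development and porting easier. Peach VM leaves all of this out; if you are interested, I may write a separate piece on QEMU device virtualization.
- Terminate the guest VM outright.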

Figure: the Intel 440FX platform emulated by QEMU

MSR Register

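An MSR (Model Specific Register) is an x86 concept: one of a set of registers in x86 processors used to control CPU operation, switch features on and off, debug, trace program execution, monitor CPU performance, and so on. Each MSR has an ID, the MSR index (also called the MSR address); when executing RDMSR or WRMSR, supplying the index tells the CPU which MSR is meant. The indices, names and field definitions of all MSRs are listed in Volume 4 of the Intel x86 manual, "Intel 64 and IA-32 Architectures Software Developer's Manual".

I introduce this concept because Peach VM's code contains a lot of inline assembly that reads MSRs to obtain various constants.

The instruction for reading an MSR is rdmsr. It uses eax, edx and ecx: ecx holds the index of the MSR, and edx and eax receive the high and low 32 bits of the result. It must be executed at ring 0 or in real-address mode, otherwise it raises #GP(0); specifying a reserved or unimplemented MSR address in ecx also raises #GP(0).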

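An example from Peach VM that reads IA32_VMX_BASIC from its MSR:

```c
u32 eax, edx, ecx;

ecx = 0x480; // MSR index of IA32_VMX_BASIC

asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)  // outputs: low half in eax, high half in edx
    : "c" (ecx)               // input: MSR index in ecx
);

printk("IA32_VMX_BASIC = 0x%08x%08x\n", edx, eax);
```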
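rdmsr always splits the 64-bit value across EDX:EAX, which is why Peach prints `edx` and `eax` side by side and later rebuilds values with `rdx << 32 | eax`. As a sketch of the same idea from user space (rdmsr itself needs ring 0), the following assumes a Linux host with the `msr` driver loaded (`modprobe msr`) and root privileges: the driver exposes `/dev/cpu/N/msr`, where the file offset selects the MSR index and each read returns 8 bytes. `msr_combine` and `read_msr` are illustrative names, not part of Peach.

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <stdint.h>
#include <stdio.h>
#include <sys/types.h>
#include <unistd.h>

#define IA32_VMX_BASIC 0x480u

/* Rebuild the 64-bit MSR value from the EDX (high) and EAX (low) halves. */
static uint64_t msr_combine(uint32_t edx, uint32_t eax)
{
    return ((uint64_t)edx << 32) | eax;
}

/* Read one MSR on the given CPU through the Linux msr driver.
 * Returns 0 on success, -1 on failure (driver missing, no permission, bad index). */
static int read_msr(int cpu, uint32_t index, uint64_t *value)
{
    char path[64];
    snprintf(path, sizeof path, "/dev/cpu/%d/msr", cpu);

    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;

    /* The driver uses the file offset as the MSR index and transfers 8 bytes. */
    ssize_t n = pread(fd, value, sizeof *value, (off_t)index);
    close(fd);
    return n == (ssize_t)sizeof *value ? 0 : -1;
}
```

For example, `read_msr(0, IA32_VMX_BASIC, &v)` should yield the same value the kernel module prints, provided the process is allowed to open `/dev/cpu/0/msr`.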

VMXON Region

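On Intel x86 processors, enabling VMX (Virtual Machine Extensions), i.e. executing the VMXON instruction, requires a 4 KB-aligned memory area called the VMXON region, whose physical address is the operand of vmxon. The region supports the logical CPU's VMX operation and is used by the VMX hardware for the whole time between VMXON and VMXOFF.

Every logical CPU that uses VMX needs its own VMXON region. To avoid the trouble of multiple CPUs, Peach VM pins itself to a single CPU at start-up.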

VMCS Region

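This is the most important object for running a VM. Most of the Peach VM kernel module (several hundred lines) manipulates the VMCS, mainly by reading (vmread) and writing (vmwrite) it. Because the VMCS holds a great deal of guest and host state, a lengthy setup is needed before the guest can run.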

下图是VMCS Region的所有字段,大体上分为了GUEST STATE AREA和HOST STATE AREA两部分:

Peach VM中对VMCS Region读的代码:

// 读取VMCS中VM_EXIT_REASON域的值

vmcs_field = 0x00004402;

asm volatile (

"vmread %1, %0\n\t" //

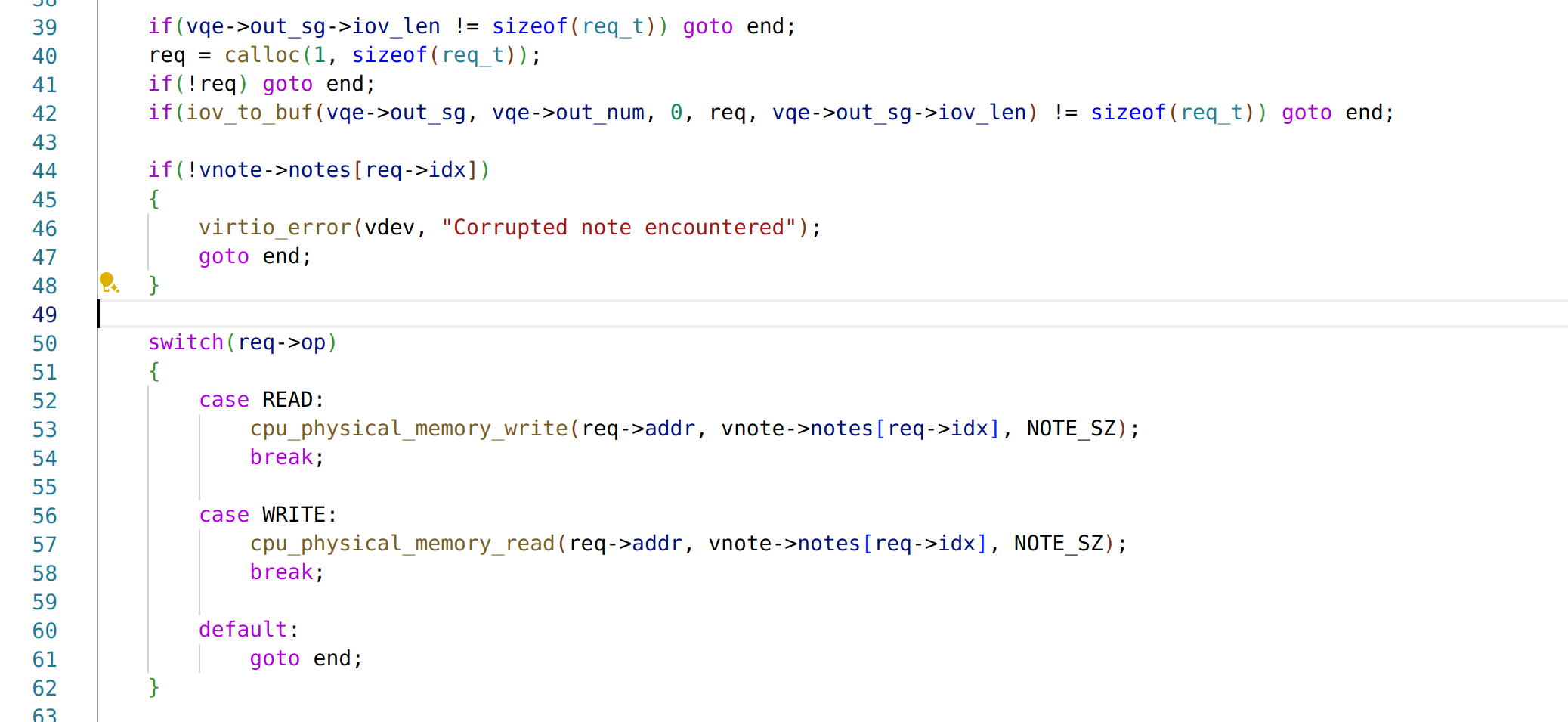

: "=r" (vmcs_field_value)

: "r" (vmcs_field)

);

printk("EXIT_REASON = 0x%llx\n", vmcs_field_value);

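Code in Peach VM that writes to the VMCS:

```c
// Write the selector of the guest CS segment
vmcs_field = 0x00000802; // GUEST_STATE_AREA->CS->Selector
vmcs_field_value = 0x0000;

asm volatile (
    "vmwrite %1, %0\n\t"       // AT&T order: vmwrite <value>, <field encoding>
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);

printk("Guest CS selector = 0x%llx\n", vmcs_field_value);
```

Note: the VMXON region and the VMCS region are two different memory areas. The VMXON region belongs to a logical CPU: each logical CPU has one, and the hardware uses it for as long as VMX operation is on. A VMCS region belongs to a vCPU: each vCPU has its own VMCS region, which the hardware uses to run that vCPU.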

Techniques

Intel EPT

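Before explaining EPT (Extended Page Tables), one basic point: in the original design, a memory access by an application inside the VM has to pass through three address spaces, i.e. three address translations:

- Guest virtual address (GVA) to guest physical address (GPA), using the guest page tables (GPT)
- Guest physical address (GPA) to host virtual address (HVA), using a mapping structure such as kvm_memory_slot
- Host virtual address (HVA) to host physical address (HPA), using the host page tables (HPT)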

GVA -> GPA -> HVA -> HPA

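Shadow page tables

This cumbersome chain of translations is slow, so shadow page tables appeared first. Put simply, a shadow page table maps guest virtual addresses (GVA) directly to host physical addresses (HPA). Reading and writing cr3 are privileged operations that trap to the VMM (the VM exit mentioned earlier), so when the guest tries to load the base address of its page table into cr3, the VMM intercepts the instruction:

- When the guest writes cr3, the VMM saves the value being written and loads instead the base address of a page table that the host built for the guest (the shadow page table)
- When the guest reads cr3, the VMM returns the value it saved earlier

The point is that although the guest kernel has its own page table, the MMU does not walk it when the guest accesses memory. It walks the shadow page table whose base is the real value in cr3 (a host physical address written by the VMM), finds the host physical address there, and so translates GVA directly to HPA. Shadow page tables have a drawback, however: a separate table has to be maintained for every guest process and kept in sync with the guest's own tables, so EPT was introduced later.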

GVA -> HPA

EPT

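EPT is a four-level page-table structure. Unlike shadow page tables, EPT does not interfere with the guest using cr3 to translate GVA to GPA; its job is to translate GPA to HPA directly. The EPT tables are maintained by the VMM but walked by hardware, and because the guest can update its own page tables without trapping to the VMM, EPT is more efficient than shadow paging. EPT works like this: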

GVA -> GPA -> HPA

EPTP -> PML4 Table -> EPT page-directory pointer Table -> EPT page-directory Table -> EPT Page Table -> Page

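EPT tables are associated with a guest instance through the VMCS: the VMCS holds an EPTP (EPT pointer) whose bits 12-51 contain the physical address of the top-level table, the PML4 table. Bits 47:39 of a guest physical address (the first 9 bits of the 48-bit address) select a PML4 entry, and one PML4 entry can in principle cover 512 GB. That is far more than a simple example needs, so Peach VM initializes only one PML4 entry and 16 pages. Note that whether the guest is 32-bit or 64-bit, EPT always works with 64-bit physical addresses here.

For the meaning of the bits in each level's entries (permission bits, address bits, reserved bits...), see the Intel manual; I won't repeat them here.

The details of address translation don't matter much here; just remember which levels of tables must be initialized before the VM runs.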
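To make the 9-bits-per-level arithmetic concrete: with 4 KiB pages each EPT table has 512 entries, so each level consumes 9 bits of the GPA and the low 12 bits are the offset inside the page; one PML4 entry therefore spans 2^39 bytes = 512 GiB. A small sketch of the split (`ept_split` is a hypothetical helper, not from Peach):

```c
#include <stdint.h>

/* Indices used by a 4-level EPT walk for one guest-physical address. */
struct ept_indices {
    unsigned pml4;   /* GPA bits 47:39 */
    unsigned pdpt;   /* GPA bits 38:30 */
    unsigned pd;     /* GPA bits 29:21 */
    unsigned pt;     /* GPA bits 20:12 */
    unsigned offset; /* GPA bits 11:0, offset inside the 4 KiB page */
};

static struct ept_indices ept_split(uint64_t gpa)
{
    struct ept_indices ix = {
        .pml4   = (unsigned)((gpa >> 39) & 0x1ff),
        .pdpt   = (unsigned)((gpa >> 30) & 0x1ff),
        .pd     = (unsigned)((gpa >> 21) & 0x1ff),
        .pt     = (unsigned)((gpa >> 12) & 0x1ff),
        .offset = (unsigned)(gpa & 0xfff),
    };
    return ix;
}
```

For Peach's 16-page guest, every GPA below 0x10000 yields PML4, PDPT and PD indices of 0 and a PT index between 0 and 15, which is why a single entry in each upper-level table is enough.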

Intel VMX 指令集

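For the full picture, again see the Intel manual mentioned above. Here are the instructions Peach VM uses (also the most common ones) for quick reference:

| Instruction | Purpose |
|---|---|
| VMPTRLD | Load a VMCS pointer and make that VMCS the current one |
| VMPTRST | Store the current VMCS pointer |
| VMCLEAR | Clear the specified VMCS: write its data back to memory and set its launch state to clear |
| VMREAD | Read a given field of the current VMCS |
| VMWRITE | Write a given field of the current VMCS |
| VMCALL | Cause a VM exit and return to the VMM |
| VMLAUNCH | Launch a VM (the current VMCS must be in the clear state) |
| VMRESUME | Return from the VMM to the VM and resume it |
| VMXOFF | Leave VMX operation |
| VMXON | Enter VMX operation |

How each instruction is used is pointed out in the code analysis section.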

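Test environment

Nothing special here; treat it as an example, not a requirement.

Host

Hardware: any reasonably recent Intel CPU

OS: Windows 10/11

Hypervisor: VMware Workstation 16

Settings: tick the following CPU options of the VMware guest to enable nested virtualization

Guest

OS: Ubuntu 20.04 LTS

Build the example:

```shell
git clone https://github.com/pandengyang/peach
cd peach
make && cd module; make
sudo ./mkdev.sh
```

Start the user-space program, then check the kernel log:

```shell
cd ../ && ./peach
sudo dmesg
```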

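Code analysis

Layout

The directory layout is simple. main.c in the root directory is the user-space program, which calls into the kernel module through ioctl. The module directory holds the kernel module source: peach_intel.c initializes the VM and creates and destroys the guest, and vmexit_handler.S saves and restores context on VM exit and VM entry. The guest directory contains the guest OS code, which is not the focus here and is skipped.

User-space part

This part plays a role similar to qemu-system; anyone who has created a guest through the /dev/kvm interface will recognize at a glance what it is doing.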

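First, pin the process to one CPU so that multi-core issues can be ignored:

```c
// mask: a cpu_set_t selecting one CPU, prepared earlier (not shown in this excerpt)
if (-1 == sched_setaffinity(0, sizeof mask, &mask)) {
    printf("failed to set affinity\n");
    goto err0;
}
```

Get the fd of the Peach VM device. It acts as a handle and is the target of everything that follows:

```c
if ((peach_fd = open("/dev/peach", O_RDWR)) < 0) {
    printf("failed to open Peach device\n");
    goto err0;
}
```

Check the environment before creating the guest:

```c
if ((ret = ioctl(peach_fd, PEACH_PROBE)) < 0) {
    printf("failed to exec ioctl PEACH_PROBE\n");
    goto err1;
}
```

The ioctl command here is PEACH_PROBE.

Create the guest, start it, and wait for it to finish:

```c
if ((ret = ioctl(peach_fd, PEACH_RUN)) < 0) {
    printf("failed to exec ioctl PEACH_RUN\n");
    goto err1;
}
```

The ioctl command here is PEACH_RUN.

Peach VM is so minimal that it exposes only two operations, PEACH_PROBE and PEACH_RUN, so the analysis of the kernel module below is organized around them.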

内核模块

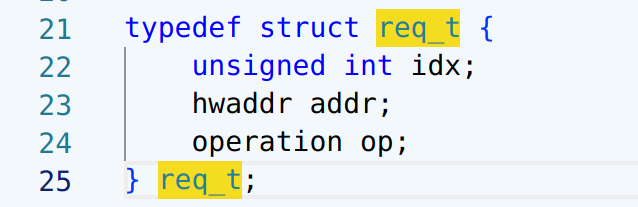

一些数据结构

struct vmcs_hdr {

u32 revision_id:31;

u32 shadow:1;

};

#define VMX_SIZE_MAX 4096

struct vmcs {

struct vmcs_hdr hdr;

u32 abort;

char data[VMX_SIZE_MAX - 8];

};

static struct vmcs *vmxon;

static struct vmcs *vmcs;

static u8 *stack;

#define GUEST_MEMORY_SIZE (0x1000 * 16)

static u8 *guest_memory; // guest内存指针

#define EPT_MEMORY_SIZE (0x1000 * 4)

static unsigned char *ept_memory; // 扩展页表内存指针

// 客户机的寄存器结构体

struct guest_regs {

u64 rax;

u64 rcx;

u64 rdx;

u64 rbx;

u64 rbp;

u64 rsp;

u64 rsi;

u64 rdi;

u64 r8;

u64 r9;

u64 r10;

u64 r11;

u64 r12;

u64 r13;

u64 r14;

u64 r15;

};

static u64 shutdown_rsp;

static u64 shutdown_rbp;

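Module initialization

static int peach_init(void) initializes the Peach VM kernel module and registers the character device, the usual kernel-module initialization routine:

```c
static int peach_init(void)
{
    printk("PEACH INIT\n");

    peach_dev = MKDEV(PEACH_MAJOR, PEACH_MINOR);
    /* register_chrdev_region() and cdev_add() return 0 on success and a
     * negative errno on failure, so the error checks must test for < 0. */
    if (register_chrdev_region(peach_dev, PEACH_COUNT, "peach") < 0) {
        printk("register_chrdev_region error\n");
        goto err0;
    }

    cdev_init(&peach_cdev, &peach_fops);
    peach_cdev.owner = THIS_MODULE;

    if (cdev_add(&peach_cdev, peach_dev, 1) < 0) {
        printk("cdev_add error\n");
        goto err1;
    }

    return 0;

err1:
    unregister_chrdev_region(peach_dev, PEACH_COUNT); /* release the range registered above */
err0:
    return -1;
}
```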

ioctl - PROBE

```c
printk("PEACH PROBE\n");

ecx = 0x480;
asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
printk("IA32_VMX_BASIC = 0x%08x%08x\n", edx, eax);

ecx = 0x486;
asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
printk("IA32_VMX_CR0_FIXED0 = 0x%08x%08x\n", edx, eax);

ecx = 0x487;
asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
printk("IA32_VMX_CR0_FIXED1 = 0x%08x%08x\n", edx, eax);

ecx = 0x488;
asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
printk("IA32_VMX_CR4_FIXED0 = 0x%08x%08x\n", edx, eax);

ecx = 0x489;
asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
printk("IA32_VMX_CR4_FIXED1 = 0x%08x%08x\n", edx, eax);

ecx = 0x48D;
asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
printk("IA32_VMX_TRUE_PINBASED_CTLS = 0x%08x%08x\n", edx, eax);

ecx = 0x48E;
asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
printk("IA32_VMX_TRUE_PROCBASED_CTLS = 0x%08x%08x\n", edx, eax);

ecx = 0x48B;
asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
printk("IA32_VMX_PROCBASED_CTLS2 = 0x%08x%08x\n", edx, eax);

ecx = 0x48F;
asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
printk("IA32_VMX_TRUE_EXIT_CTLS = 0x%08x%08x\n", edx, eax);

ecx = 0x490;
asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
printk("IA32_VMX_TRUE_ENTRY_CTLS = 0x%08x%08x\n", edx, eax);

ecx = 0x48C;
asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
printk("IA32_VMX_EPT_VPID_CAP = 0x%08x%08x\n", edx, eax);
```

This interface mainly executes a series of rdmsr instructions and prints the results with printk. As described earlier for rdmsr:

...it uses eax, edx and ecx: ecx holds the index of the MSR, and edx and eax receive the high and low 32 bits of the result...

These values can be used to decide whether the current platform can be virtualized with VMX. Peach VM does not actually check anything; it just prints them:

```text
[ 62.894908] PEACH PROBE
[ 62.894930] IA32_VMX_BASIC = 0x00d8100000000001
[ 62.894934] IA32_VMX_CR0_FIXED0 = 0x0000000080000021
[ 62.894937] IA32_VMX_CR0_FIXED1 = 0x00000000ffffffff
[ 62.894940] IA32_VMX_CR4_FIXED0 = 0x0000000000002000
[ 62.894943] IA32_VMX_CR4_FIXED1 = 0x0000000000772fff
[ 62.894945] IA32_VMX_TRUE_PINBASED_CTLS = 0x0000003f00000016
[ 62.894948] IA32_VMX_TRUE_PROCBASED_CTLS = 0xfff9fffe04006172
[ 62.894951] IA32_VMX_PROCBASED_CTLS2 = 0x00553cfe00000000
[ 62.894954] IA32_VMX_TRUE_EXIT_CTLS = 0x003fffff00036dfb
[ 62.894957] IA32_VMX_TRUE_ENTRY_CTLS = 0x0000f3ff000011fb
[ 62.894962] IA32_VMX_EPT_VPID_CAP = 0x00000f0106714141
```
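The first line can be decoded by hand. A sketch, following the IA32_VMX_BASIC layout in the Intel SDM (Vol. 3, Appendix A.1); the struct and function names are made up for illustration. Applied to the logged value 0x00d8100000000001 it gives revision identifier 1, a 4096-byte region size, the write-back memory type (6) and bit 55 set, meaning the IA32_VMX_TRUE_*_CTLS MSRs that Peach reads are available. The revision identifier is implementation-specific (here it comes from VMware's nested VMX), so a portable VMM would copy it from this MSR rather than hard-code it.

```c
#include <stdbool.h>
#include <stdint.h>

struct vmx_basic {
    uint32_t revision_id;  /* bits 30:0, goes into the first dword of VMXON/VMCS regions */
    uint32_t region_size;  /* bits 44:32, bytes to allocate for VMXON/VMCS regions */
    bool     addr_32bit;   /* bit 48: region addresses limited to 32 bits */
    unsigned mem_type;     /* bits 53:50, 6 = write-back */
    bool     true_ctls;    /* bit 55: IA32_VMX_TRUE_*_CTLS MSRs are available */
};

static struct vmx_basic decode_vmx_basic(uint64_t v)
{
    struct vmx_basic b = {
        .revision_id = (uint32_t)(v & 0x7fffffffu),
        .region_size = (uint32_t)((v >> 32) & 0x1fffu),
        .addr_32bit  = ((v >> 48) & 1) != 0,
        .mem_type    = (unsigned)((v >> 50) & 0xfu),
        .true_ctls   = ((v >> 55) & 1) != 0,
    };
    return b;
}
```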

ioctl - PEACH_RUN

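First, kmalloc a block of memory to serve as the guest OS's RAM: 16 pages, which is more than enough:

```c
guest_memory = (u8 *) kmalloc(GUEST_MEMORY_SIZE, GFP_KERNEL);
guest_memory_pa = __pa(guest_memory);
```

guest_memory_pa is computed with the __pa macro even though guest_memory already exists because EPT maps GPAs straight to HPAs, so every address written into an EPT entry must be a host physical address. The program itself, however, still reads and writes through the host virtual address (guest_memory). Every xx / xx_pa pair below has this same relationship.

Write the guest OS image at the start of guest memory. Since it is a mini OS for testing, there is no loader or anything similar; the image is simply copied into memory:

```c
for (i = 0; i < guest_bin_len; i++) {
    guest_memory[i] = guest_bin[i];
}
```

Call init_ept() to initialize every level of the EPT, passing the address of the global variable ept_pointer and the guest_memory_pa just computed:

```c
init_ept(&ept_pointer, guest_memory_pa);
```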

init_ept

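kmalloc another block of memory to hold the EPT tables themselves:

```c
ept_memory = (u8 *) kmalloc(EPT_MEMORY_SIZE, GFP_KERNEL);
memset(ept_memory, 0, EPT_MEMORY_SIZE);
ept_va = (u64) ept_memory;
ept_pa = __pa(ept_memory);
```

Initialize the EPTP:

```c
init_ept_pointer(ept_pointer, ept_pa);

static void init_ept_pointer(u64 *p, u64 pa)
{
    *p = pa | 1 << 6 | 3 << 3 | 6; // PML4 physical address | A/D enable | walk length - 1 | memory type
    return;
}
```

So initializing the EPTP just means OR-ing some control bits into the low bits of ept_pa and storing the result in the global ept_pointer. The meaning of these bits is given in the EPTP format table of the Intel manual:

From that table: 1 << 6 (bit 6) enables the accessed and dirty flags for EPT, 3 << 3 (bits 5:3) is the EPT page-walk length minus 1, i.e. a 4-level walk, and 6 (bits 2:0) selects the write-back memory type for the EPT paging structures.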
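The same construction as a stand-alone sketch, together with a check of the capabilities those bits depend on in IA32_VMX_EPT_VPID_CAP (MSR 0x48C): bit 6 (page-walk length of 4), bit 14 (write-back) and bit 21 (accessed/dirty flags). The macro and function names are hypothetical; unlike `init_ept_pointer`, `make_eptp` also masks the low 12 bits of the address, which are zero anyway for a page-aligned table.

```c
#include <stdbool.h>
#include <stdint.h>

#define EPTP_MEMTYPE_WB  6ULL          /* bits 2:0: write-back */
#define EPTP_WALK_LEN_4  (3ULL << 3)   /* bits 5:3: page-walk length - 1 */
#define EPTP_AD_ENABLE   (1ULL << 6)   /* bit 6: enable accessed/dirty flags */

/* PML4 physical address plus the same control bits as init_ept_pointer(). */
static uint64_t make_eptp(uint64_t pml4_pa)
{
    return (pml4_pa & ~0xfffULL) | EPTP_AD_ENABLE | EPTP_WALK_LEN_4 | EPTP_MEMTYPE_WB;
}

/* Check IA32_VMX_EPT_VPID_CAP for the features the EPTP above relies on. */
static bool eptp_supported(uint64_t ept_vpid_cap)
{
    bool walk4 = (ept_vpid_cap & (1ULL << 6)) != 0;   /* 4-level page walk */
    bool wb    = (ept_vpid_cap & (1ULL << 14)) != 0;  /* write-back structures */
    bool ad    = (ept_vpid_cap & (1ULL << 21)) != 0;  /* accessed/dirty flags */
    return walk4 && wb && ad;
}
```

With the logged capability value 0x00000f0106714141 all three bits are set, and a PML4 table at physical address 0x1000 would give an EPTP of 0x105e.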

Next, the entries of each level are initialized. Each table is 4 KB, and the tables are laid out contiguously.

In the code below, entry is a temporary variable pointing at the entry being initialized in each table.

Set the first entry of the PML4 table:

```c
/* Point entry at the PML4 table */
entry = (u64 *) ept_va;
/* Add a PML4 entry that points to the EPT page-directory-pointer table */
init_pml4e(entry, ept_pa + 0x1000);
printk("pml4e = 0x%llx\n", *entry);
```

Set the first entry of the EPT page-directory-pointer table:

```c
/* Point entry at the EPT page-directory-pointer table */
entry = (u64 *) (ept_va + 0x1000);
/* Add an entry that points to the EPT page-directory table */
init_pdpte(entry, ept_pa + 0x2000);
printk("pdpte = 0x%llx\n", *entry);
```

Set the first entry of the EPT page-directory table:

```c
/* Point entry at the EPT page-directory table */
entry = (u64 *) (ept_va + 0x2000);
/* Add an entry that points to the EPT page table */
init_pde(entry, ept_pa + 0x3000);
printk("pdte = 0x%llx\n", *entry);
```

Set the first 16 entries of the EPT page table, entry n pointing to guest_memory_pa + n * page size:

```c
/* Fill the first 16 EPT page-table entries with page addresses */
for (i = 0; i < 16; i++) {
    entry = (u64 *) (ept_va + 0x3000 + i * 8);     // point entry at the i-th entry
    init_pte(entry, guest_memory_pa + i * 0x1000); // map guest page i
    printk("pte = 0x%llx\n", *entry);
}
```

init_ept ends here.
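The bodies of `init_pml4e`, `init_pdpte`, `init_pde` and `init_pte` are not shown above, so the following is only a hypothetical user-space simulation of the layout they build: four contiguous 4 KiB tables, one entry in each upper-level table, and 16 leaf entries pointing at consecutive guest pages. It assumes every entry grants read/write/execute (bits 2:0 = 7) and that leaf entries also select the write-back memory type (bits 5:3 = 6); the walk then mimics what the processor does starting from the EPTP. All names are illustrative.

```c
#include <stdint.h>
#include <string.h>

#define EPT_RWX       0x7ULL                 /* bits 2:0: read, write, execute */
#define EPT_LEAF_WB   (6ULL << 3)            /* leaf only, bits 5:3: memory type WB */
#define EPT_ADDR_MASK 0x000ffffffffff000ULL  /* bits 51:12: physical address */

/* Four 4 KiB tables back to back, like Peach's ept_memory:
 * +0x0000 PML4, +0x1000 PDPT, +0x2000 PD, +0x3000 PT.
 * table_base plays the role of ept_pa (a simulated physical address). */
struct ept_sim {
    uint64_t table_base;
    uint64_t t[4][512];
};

static void ept_sim_init(struct ept_sim *e, uint64_t table_base,
                         uint64_t guest_hpa, int pages)
{
    memset(e->t, 0, sizeof e->t);
    e->table_base = table_base;
    e->t[0][0] = (table_base + 0x1000) | EPT_RWX;  /* PML4E -> PDPT */
    e->t[1][0] = (table_base + 0x2000) | EPT_RWX;  /* PDPTE -> PD   */
    e->t[2][0] = (table_base + 0x3000) | EPT_RWX;  /* PDE   -> PT   */
    for (int i = 0; i < pages && i < 512; i++)     /* PTE i -> guest page i */
        e->t[3][i] = (guest_hpa + (uint64_t)i * 0x1000) | EPT_LEAF_WB | EPT_RWX;
}

/* Translate a GPA by walking the four levels from the EPTP.
 * Returns 0 when an entry is not present (no R/W/X bit set). */
static uint64_t ept_sim_walk(const struct ept_sim *e, uint64_t gpa)
{
    static const int shift[4] = {39, 30, 21, 12};
    uint64_t table_pa = e->table_base;  /* what EPTP bits 51:12 would hold */

    for (int level = 0; level < 4; level++) {
        if (table_pa < e->table_base || table_pa - e->table_base > 3 * 0x1000)
            return 0;                   /* points outside the simulated tables */
        const uint64_t *table = e->t[(table_pa - e->table_base) / 0x1000];
        uint64_t entry = table[(gpa >> shift[level]) & 0x1ff];
        if ((entry & EPT_RWX) == 0)
            return 0;                   /* not present: EPT violation on real hardware */
        table_pa = entry & EPT_ADDR_MASK;
    }
    return table_pa | (gpa & 0xfff);    /* the last entry held the page frame */
}
```

A GPA inside the 16 mapped pages translates to `guest_hpa + gpa`, while GPA 0x10000 hits an empty page-table entry, which on real hardware would cause an EPT-violation VM exit.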

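Next comes a minor highlight: initializing the VMXON region and the VMCS region for this guest instance:

```c
vmxon = (struct vmcs *) kmalloc(4096, GFP_KERNEL);
memset(vmxon, 0, 4096);
vmxon->hdr.revision_id = 0x00000001; // must equal IA32_VMX_BASIC[30:0], which is 1 on this test machine
vmxon->hdr.shadow = 0x00000000;
vmxon_pa = __pa(vmxon);

vmcs = (struct vmcs *) kmalloc(4096, GFP_KERNEL);
memset(vmcs, 0, 4096);
vmcs->hdr.revision_id = 0x00000001; // same revision identifier as above
vmcs->hdr.shadow = 0x00000000;
vmcs_pa = __pa(vmcs);
```

As mentioned before, the VMXON region exists for as long as virtualization is on, whereas the VMCS belongs to a single guest instance. They are initialized together here because the example is simple and does not try to support multiple instances, so coupling them is fine.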

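Next, bts copies bit 13 of the host CR4 (CR4.VMXE) into CF and sets it to 1, and the result is written back to cr4. This turns on the virtualization switch in CR4:

```c
asm volatile (
    "movq %%cr4, %%rax\n\t"
    "bts $13, %%rax\n\t"   // CF = old CR4.VMXE, then set CR4.VMXE
    "movq %%rax, %%cr4"
    ::: "rax", "cc"        // the asm modifies rax and the flags
);
```

vmxon takes the physical address of the VMXON region as its operand and enters VMX operation. setna then records whether it succeeded: it sets ret1 to 1 if CF or ZF is set, i.e. on either kind of VMX failure:

```c
asm volatile (
    "vmxon %[pa]\n\t"
    "setna %[ret]"         // ret1 = 1 if CF or ZF is set
    : [ret] "=rm" (ret1)
    : [pa] "m" (vmxon_pa)  // memory operand holding the 64-bit physical address
    : "cc", "memory"
);
```

Note that enabling VMX takes two "switches": bit 13 of the host CR4 and the vmxon instruction. (In addition, the IA32_FEATURE_CONTROL MSR must allow VMX, which is normally set up by the firmware.)

A note on GCC inline assembly: adding "cc" and "memory" to the clobber list (the third colon section) tells the compiler that the asm modifies the condition flags and memory, so it must not assume that values it cached before the asm are still valid afterwards.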
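`setna` folds two different failures into one flag. VMX instructions report VMfailInvalid by setting CF (for example, when there is no current VMCS) and VMfailValid by setting ZF, in which case an error number is also stored in the current VMCS's VM-instruction error field (encoding 0x4400). A minimal sketch that tells them apart, assuming the caller captured RFLAGS right after the VMX instruction (for example with `pushfq`/`popq`); the enum and function names are hypothetical.

```c
#include <stdint.h>

enum vmx_status { VMX_SUCCEED, VMX_FAIL_INVALID, VMX_FAIL_VALID };

#define RFLAGS_CF (1ULL << 0)
#define RFLAGS_ZF (1ULL << 6)

/* Classify the outcome of a VMX instruction from the RFLAGS it left behind. */
static enum vmx_status vmx_status_from_rflags(uint64_t rflags)
{
    if (rflags & RFLAGS_CF)
        return VMX_FAIL_INVALID;  /* no valid current VMCS: no error field to read */
    if (rflags & RFLAGS_ZF)
        return VMX_FAIL_VALID;    /* error number is in VM_INSTRUCTION_ERROR (0x4400) */
    return VMX_SUCCEED;
}
```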

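Before setting up the VMCS region, initialize it with vmclear, which sets its launch state to clear and makes sure its data is in memory:

```c
asm volatile (
    "vmclear %[pa]\n\t"
    "setna %[ret]"
    : [ret] "=rm" (ret1)
    : [pa] "m" (vmcs_pa)
    : "cc", "memory"
);
```

Load the VMCS pointer and make that VMCS the current one:

```c
asm volatile (
    "vmptrld %[pa]\n\t"
    "setna %[ret]"
    : [ret] "=rm" (ret1)
    : [pa] "m" (vmcs_pa)
    : "cc", "memory"
);
```

Once a VMCS is loaded on a logical CPU, software must not access it with ordinary memory instructions: the processor may keep VMCS data cached internally and the in-memory layout is implementation-specific, so doing so gives unpredictable results. The supported way to access it is through vmread and vmwrite. You can think of the logical CPU as putting an access guard around the current VMCS.

Now the tedious part begins!

From here on vmread and vmwrite take center stage: to give controlled access to the VMCS region of the current instance, Intel provides vmwrite and vmread. Each takes two operands: one is the field encoding (an encoding, not an offset into the region), the other is the value to write or the register that receives the value read. In the AT&T syntax used by Peach, the field encoding is the second operand of vmwrite (vmwrite value, field) and the first operand of vmread (vmread field, dest).

Since Peach VM writes every field encoding as a hex constant, here first is the enum of constants needed to access the VMCS fields: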

```c
enum vmcs_field {
    VIRTUAL_PROCESSOR_ID = 0x00000000,
    GUEST_ES_SELECTOR = 0x00000800,
    GUEST_CS_SELECTOR = 0x00000802,
    GUEST_SS_SELECTOR = 0x00000804,
    GUEST_DS_SELECTOR = 0x00000806,
    GUEST_FS_SELECTOR = 0x00000808,
    GUEST_GS_SELECTOR = 0x0000080a,
    GUEST_LDTR_SELECTOR = 0x0000080c,
    GUEST_TR_SELECTOR = 0x0000080e,
    HOST_ES_SELECTOR = 0x00000c00,
    HOST_CS_SELECTOR = 0x00000c02,
    HOST_SS_SELECTOR = 0x00000c04,
    HOST_DS_SELECTOR = 0x00000c06,
    HOST_FS_SELECTOR = 0x00000c08,
    HOST_GS_SELECTOR = 0x00000c0a,
    HOST_TR_SELECTOR = 0x00000c0c,
    IO_BITMAP_A = 0x00002000,
    IO_BITMAP_A_HIGH = 0x00002001,
    IO_BITMAP_B = 0x00002002,
    IO_BITMAP_B_HIGH = 0x00002003,
    MSR_BITMAP = 0x00002004,
    MSR_BITMAP_HIGH = 0x00002005,
    VM_EXIT_MSR_STORE_ADDR = 0x00002006,
    VM_EXIT_MSR_STORE_ADDR_HIGH = 0x00002007,
    VM_EXIT_MSR_LOAD_ADDR = 0x00002008,
    VM_EXIT_MSR_LOAD_ADDR_HIGH = 0x00002009,
    VM_ENTRY_MSR_LOAD_ADDR = 0x0000200a,
    VM_ENTRY_MSR_LOAD_ADDR_HIGH = 0x0000200b,
    TSC_OFFSET = 0x00002010,
    TSC_OFFSET_HIGH = 0x00002011,
    VIRTUAL_APIC_PAGE_ADDR = 0x00002012,
    VIRTUAL_APIC_PAGE_ADDR_HIGH = 0x00002013,
    APIC_ACCESS_ADDR = 0x00002014,
    APIC_ACCESS_ADDR_HIGH = 0x00002015,
    EPT_POINTER = 0x0000201a,
    EPT_POINTER_HIGH = 0x0000201b,
    GUEST_PHYSICAL_ADDRESS = 0x00002400,
    GUEST_PHYSICAL_ADDRESS_HIGH = 0x00002401,
    VMCS_LINK_POINTER = 0x00002800,
    VMCS_LINK_POINTER_HIGH = 0x00002801,
    GUEST_IA32_DEBUGCTL = 0x00002802,
    GUEST_IA32_DEBUGCTL_HIGH = 0x00002803,
    GUEST_IA32_PAT = 0x00002804,
    GUEST_IA32_PAT_HIGH = 0x00002805,
    GUEST_IA32_EFER = 0x00002806,
    GUEST_IA32_EFER_HIGH = 0x00002807,
    GUEST_IA32_PERF_GLOBAL_CTRL = 0x00002808,
    GUEST_IA32_PERF_GLOBAL_CTRL_HIGH = 0x00002809,
    GUEST_PDPTR0 = 0x0000280a,
    GUEST_PDPTR0_HIGH = 0x0000280b,
    GUEST_PDPTR1 = 0x0000280c,
    GUEST_PDPTR1_HIGH = 0x0000280d,
    GUEST_PDPTR2 = 0x0000280e,
    GUEST_PDPTR2_HIGH = 0x0000280f,
    GUEST_PDPTR3 = 0x00002810,
    GUEST_PDPTR3_HIGH = 0x00002811,
    HOST_IA32_PAT = 0x00002c00,
    HOST_IA32_PAT_HIGH = 0x00002c01,
    HOST_IA32_EFER = 0x00002c02,
    HOST_IA32_EFER_HIGH = 0x00002c03,
    HOST_IA32_PERF_GLOBAL_CTRL = 0x00002c04,
    HOST_IA32_PERF_GLOBAL_CTRL_HIGH = 0x00002c05,
    PIN_BASED_VM_EXEC_CONTROL = 0x00004000,
    CPU_BASED_VM_EXEC_CONTROL = 0x00004002,
    EXCEPTION_BITMAP = 0x00004004,
    PAGE_FAULT_ERROR_CODE_MASK = 0x00004006,
    PAGE_FAULT_ERROR_CODE_MATCH = 0x00004008,
    CR3_TARGET_COUNT = 0x0000400a,
    VM_EXIT_CONTROLS = 0x0000400c,
    VM_EXIT_MSR_STORE_COUNT = 0x0000400e,
    VM_EXIT_MSR_LOAD_COUNT = 0x00004010,
    VM_ENTRY_CONTROLS = 0x00004012,
    VM_ENTRY_MSR_LOAD_COUNT = 0x00004014,
    VM_ENTRY_INTR_INFO_FIELD = 0x00004016,
    VM_ENTRY_EXCEPTION_ERROR_CODE = 0x00004018,
    VM_ENTRY_INSTRUCTION_LEN = 0x0000401a,
    TPR_THRESHOLD = 0x0000401c,
    SECONDARY_VM_EXEC_CONTROL = 0x0000401e,
    PLE_GAP = 0x00004020,
    PLE_WINDOW = 0x00004022,
    VM_INSTRUCTION_ERROR = 0x00004400,
    VM_EXIT_REASON = 0x00004402,
    VM_EXIT_INTR_INFO = 0x00004404,
    VM_EXIT_INTR_ERROR_CODE = 0x00004406,
    IDT_VECTORING_INFO_FIELD = 0x00004408,
    IDT_VECTORING_ERROR_CODE = 0x0000440a,
    VM_EXIT_INSTRUCTION_LEN = 0x0000440c,
    VMX_INSTRUCTION_INFO = 0x0000440e,
    GUEST_ES_LIMIT = 0x00004800,
    GUEST_CS_LIMIT = 0x00004802,
    GUEST_SS_LIMIT = 0x00004804,
    GUEST_DS_LIMIT = 0x00004806,
    GUEST_FS_LIMIT = 0x00004808,
    GUEST_GS_LIMIT = 0x0000480a,
    GUEST_LDTR_LIMIT = 0x0000480c,
    GUEST_TR_LIMIT = 0x0000480e,
    GUEST_GDTR_LIMIT = 0x00004810,
    GUEST_IDTR_LIMIT = 0x00004812,
    GUEST_ES_AR_BYTES = 0x00004814,
    GUEST_CS_AR_BYTES = 0x00004816,
    GUEST_SS_AR_BYTES = 0x00004818,
    GUEST_DS_AR_BYTES = 0x0000481a,
    GUEST_FS_AR_BYTES = 0x0000481c,
    GUEST_GS_AR_BYTES = 0x0000481e,
    GUEST_LDTR_AR_BYTES = 0x00004820,
    GUEST_TR_AR_BYTES = 0x00004822,
    GUEST_INTERRUPTIBILITY_INFO = 0x00004824,
    GUEST_ACTIVITY_STATE = 0x00004826,
    GUEST_SYSENTER_CS = 0x0000482A,
    HOST_IA32_SYSENTER_CS = 0x00004c00,
    CR0_GUEST_HOST_MASK = 0x00006000,
    CR4_GUEST_HOST_MASK = 0x00006002,
    CR0_READ_SHADOW = 0x00006004,
    CR4_READ_SHADOW = 0x00006006,
    CR3_TARGET_VALUE0 = 0x00006008,
    CR3_TARGET_VALUE1 = 0x0000600a,
    CR3_TARGET_VALUE2 = 0x0000600c,
    CR3_TARGET_VALUE3 = 0x0000600e,
    EXIT_QUALIFICATION = 0x00006400,
    GUEST_LINEAR_ADDRESS = 0x0000640a,
    GUEST_CR0 = 0x00006800,
    GUEST_CR3 = 0x00006802,
    GUEST_CR4 = 0x00006804,
    GUEST_ES_BASE = 0x00006806,
    GUEST_CS_BASE = 0x00006808,
    GUEST_SS_BASE = 0x0000680a,
    GUEST_DS_BASE = 0x0000680c,
    GUEST_FS_BASE = 0x0000680e,
    GUEST_GS_BASE = 0x00006810,
    GUEST_LDTR_BASE = 0x00006812,
    GUEST_TR_BASE = 0x00006814,
    GUEST_GDTR_BASE = 0x00006816,
    GUEST_IDTR_BASE = 0x00006818,
    GUEST_DR7 = 0x0000681a,
    GUEST_RSP = 0x0000681c,
    GUEST_RIP = 0x0000681e,
    GUEST_RFLAGS = 0x00006820,
    GUEST_PENDING_DBG_EXCEPTIONS = 0x00006822,
    GUEST_SYSENTER_ESP = 0x00006824,
    GUEST_SYSENTER_EIP = 0x00006826,
    HOST_CR0 = 0x00006c00,
    HOST_CR3 = 0x00006c02,
    HOST_CR4 = 0x00006c04,
    HOST_FS_BASE = 0x00006c06,
    HOST_GS_BASE = 0x00006c08,
    HOST_TR_BASE = 0x00006c0a,
    HOST_GDTR_BASE = 0x00006c0c,
    HOST_IDTR_BASE = 0x00006c0e,
    HOST_IA32_SYSENTER_ESP = 0x00006c10,
    HOST_IA32_SYSENTER_EIP = 0x00006c12,
    HOST_RSP = 0x00006c14,
    HOST_RIP = 0x00006c16,
};
```

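I suspect you no longer remember which fields the VMCS contains, so here is that figure again:

One more point: vmread/vmwrite do not treat segment registers such as CS, SS and GS as single fields. You don't have to build a whole segment register value and update it at once; each part, XX->Selector, XX->BaseAddress, XX->SegmentLimit and XX->AccessRight, has its own field and is written separately. This adds flexibility at the cost of verbosity.

Now initialize some of the segment registers, RIP and RFLAGS in the GUEST STATE AREA: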
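The hex constants in the enum are not arbitrary: an encoding packs the field's width (bits 14:13), its type or area (bits 11:10), an index (bits 9:1) and an access-type bit (bit 0, set for the `_HIGH` half of a 64-bit field), as described in the Intel SDM (Vol. 3, Appendix B). A sketch decoder with illustrative names, handy for sanity-checking a constant before passing it to vmread/vmwrite:

```c
#include <stdint.h>

enum vmcs_width { W16 = 0, W64 = 1, W32 = 2, WNATURAL = 3 };
enum vmcs_type  { T_CONTROL = 0, T_EXIT_INFO = 1, T_GUEST = 2, T_HOST = 3 };

struct vmcs_enc {
    unsigned high;   /* bit 0: 1 = upper 32 bits of a 64-bit field ("_HIGH") */
    unsigned index;  /* bits 9:1 */
    unsigned type;   /* bits 11:10: control, exit info, guest state, host state */
    unsigned width;  /* bits 14:13: 16-bit, 64-bit, 32-bit, natural width */
};

static struct vmcs_enc vmcs_decode(uint32_t enc)
{
    struct vmcs_enc d = {
        .high  = enc & 1,
        .index = (enc >> 1) & 0x1ff,
        .type  = (enc >> 10) & 3,
        .width = (enc >> 13) & 3,
    };
    return d;
}
```

For example, 0x681E (GUEST_RIP) decodes as a natural-width guest-state field, and 0x4402 (VM_EXIT_REASON) as a 32-bit read-only exit-information field.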

```c
vmcs_field = 0x00000802; // guest CS selector
vmcs_field_value = 0x0000;
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Guest CS selector = 0x%llx\n", vmcs_field_value);

vmcs_field = 0x0000080E; // guest TR selector
vmcs_field_value = 0x0000;
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Guest TR selector = 0x%llx\n", vmcs_field_value);

// -------------------- large chunk omitted --------------------

vmcs_field = 0x00006800; // guest CR0
vmcs_field_value = 0x00000020;
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Guest CR0 = 0x%llx\n", vmcs_field_value);

vmcs_field = 0x00006804; // guest CR4
vmcs_field_value = 0x0000000000002000;
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Guest CR4 = 0x%llx\n", vmcs_field_value);

vmcs_field = 0x00006808; // guest CS base
vmcs_field_value = 0x0000000000000000;
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Guest CS base = 0x%llx\n", vmcs_field_value);

// -------------------- large chunk omitted --------------------

vmcs_field = 0x0000681E; // guest RIP (where the guest OS starts executing!)
vmcs_field_value = 0x0000000000000000;
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Guest RIP = 0x%llx\n", vmcs_field_value);

vmcs_field = 0x00006820; // guest RFLAGS
vmcs_field_value = 0x0000000000000002; // only bit 1, which is reserved and always 1
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Guest RFLAGS = 0x%llx\n", vmcs_field_value);
```

Skipping the repetitive parts, look at field 0x0000681E (GUEST_RIP): it holds the guest OS's starting point of execution. Peach VM writes 0x0000000000000000 because the mini OS image was copied to the very beginning of guest memory, i.e. guest physical address 0.
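The guest starts with CR0 = 0x20 (only CR0.NE) and CR4 = 0x2000 (only CR4.VMXE), i.e. in real-address mode without paging. Against the logged IA32_VMX_CR0_FIXED0 = 0x80000021 that CR0 would be rejected, since PE and PG are required; it is accepted only because Peach's secondary controls (0xA2) include bit 7, "unrestricted guest", which exempts CR0.PE and CR0.PG from that requirement. A sketch of the rule, with hypothetical helper names:

```c
#include <stdbool.h>
#include <stdint.h>

#define CR0_PE (1ULL << 0)
#define CR0_PG (1ULL << 31)

/* A CR value is allowed in VMX operation when every bit that is 1 in FIXED0
 * is set and every bit that is 0 in FIXED1 is clear. */
static bool cr_ok(uint64_t value, uint64_t fixed0, uint64_t fixed1)
{
    return (value & fixed0) == fixed0 && (value & ~fixed1) == 0;
}

/* With "unrestricted guest" enabled, guest CR0.PE and CR0.PG may be 0. */
static bool guest_cr0_ok(uint64_t cr0, uint64_t fixed0, uint64_t fixed1,
                         bool unrestricted)
{
    if (unrestricted)
        fixed0 &= ~(CR0_PE | CR0_PG);
    return cr_ok(cr0, fixed0, fixed1);
}
```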

Then initialize part of the HOST STATE AREA, starting with the segment selectors:

```c
vmcs_field = 0x00000C00; // host ES selector
asm volatile (
    "movq %%es, %0\n\t"  // read the host's current ES (the whole selector)
    : "=a" (vmcs_field_value)
    :
);
vmcs_field_value &= 0xF8; // clear RPL and TI (bits 2:0), which VM entry requires for host selectors
asm volatile (
    "vmwrite %1, %0\n\t" // store the selector in host_state_area->ES_SELECTOR
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Host ES selector = 0x%llx\n", vmcs_field_value);

vmcs_field = 0x00000C02; // host CS selector
asm volatile (
    "movq %%cs, %0\n\t"
    : "=a" (vmcs_field_value)
    :
);
vmcs_field_value &= 0xF8;
asm volatile (
    "vmwrite %1, %0\n\t" // store it in host_state_area->CS_SELECTOR
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Host CS selector = 0x%llx\n", vmcs_field_value);

// -------------------- large chunk omitted --------------------

vmcs_field = 0x00002C00; // host IA32_PAT
ecx = 0x277;
asm volatile (
    "rdmsr\n\t"          // the value lives in an MSR, so read it with rdmsr first (same below)
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
rdx = edx;
vmcs_field_value = rdx << 32 | eax;
asm volatile (
    "vmwrite %1, %0\n\t" // store it in host_state_area->IA32_PAT
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Host IA32_PAT = 0x%llx\n", vmcs_field_value);

// -------------------- large chunk omitted --------------------

vmcs_field = 0x00006C00; // host CR0
asm volatile (
    "movq %%cr0, %0\n\t"
    : "=a" (vmcs_field_value)
    :
);
asm volatile (
    "vmwrite %1, %0\n\t" // store it in host_state_area->CR0
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Host CR0 = 0x%llx\n", vmcs_field_value);

vmcs_field = 0x00006C02; // host CR3
asm volatile (
    "movq %%cr3, %0\n\t"
    : "=a" (vmcs_field_value)
    :
);
asm volatile (
    "vmwrite %1, %0\n\t" // store it in host_state_area->CR3
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Host CR3 = 0x%llx\n", vmcs_field_value);

vmcs_field = 0x00006C04; // host CR4
asm volatile (
    "movq %%cr4, %0\n\t"
    : "=a" (vmcs_field_value)
    :
);
asm volatile (
    "vmwrite %1, %0\n\t" // store it in host_state_area->CR4
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Host CR4 = 0x%llx\n", vmcs_field_value);

// -------------------- large chunk omitted --------------------

vmcs_field = 0x00006C0C; // host GDTR_BASE
asm volatile (
    "sgdt %0\n\t"
    : "=m" (xdtr)
    :
);
vmcs_field_value = *((u64 *) (xdtr + 2)); // the base follows the 2-byte limit
asm volatile (
    "vmwrite %1, %0\n\t" // store it in host_state_area->GDTR_BASE
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Host GDTR base = 0x%llx\n", vmcs_field_value);

// -------------------- large chunk omitted --------------------
```
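Two details of the host-state code above, as a user-space sketch with hypothetical names. First, VM entry requires the RPL and TI bits (2:0) of every host selector to be 0; Peach's `& 0xF8` achieves that but also drops bits 15:8, which is harmless only because the Linux kernel selectors involved are below 0x100, so `& ~0x7` is the more general mask. Second, in 64-bit mode `sgdt` stores 10 bytes, a 16-bit limit followed by a 64-bit base, which is why the code reads the base at `xdtr + 2`.

```c
#include <stdint.h>
#include <string.h>

/* Clear RPL (bits 1:0) and TI (bit 2) while keeping the whole selector index. */
static uint16_t host_selector(uint16_t sel)
{
    return (uint16_t)(sel & ~0x7u);
}

/* Layout written by sgdt/sidt in 64-bit mode: 2-byte limit, 8-byte base. */
struct xdtr_image {
    uint16_t limit;
    uint64_t base;
};

/* Parse the raw 10-byte image; memcpy avoids unaligned pointer casts.
 * Assumes a little-endian host, as on x86. */
static struct xdtr_image parse_xdtr(const uint8_t raw[10])
{
    struct xdtr_image d;
    memcpy(&d.limit, raw, 2);     /* bytes 0..1 */
    memcpy(&d.base, raw + 2, 8);  /* bytes 2..9: what Peach reads at xdtr + 2 */
    return d;
}
```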

IA32_SYSENTER_EIP, set below, is the address of the ring 0 code the CPU jumps to when user code makes a fast system call with SYSENTER. System calls made with SYSENTER avoid the relatively high overhead of an ordinary interrupt.

```c
vmcs_field = 0x00006C12; // host IA32_SYSENTER_EIP
ecx = 0x176;
asm volatile (
    "rdmsr\n\t"
    : "=a" (eax), "=d" (edx)
    : "c" (ecx)
);
rdx = edx;
vmcs_field_value = rdx << 32 | eax;
asm volatile (
    "vmwrite %1, %0\n\t" // store it in host_state_area->IA32_SYSENTER_EIP
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Host IA32_SYSENTER_EIP = 0x%llx\n", vmcs_field_value);
```

Now a key point: the next two steps set RSP and RIP in the HOST STATE AREA:

```c
stack = (u8 *) kmalloc(0x8000, GFP_KERNEL); // allocate the stack that host RSP will point into

vmcs_field = 0x00006C14; // host RSP
vmcs_field_value = (u64) stack + 0x8000;     // top of the stack (it grows downward)
asm volatile (
    "vmwrite %1, %0\n\t" // store it in host_state_area->RSP
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Host RSP = 0x%llx\n", vmcs_field_value);

vmcs_field = 0x00006C16; // host RIP
vmcs_field_value = (u64) _vmexit_handler;    // where execution continues after a VM exit
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Host RIP = 0x%llx\n", vmcs_field_value);
```

As said before, the guest and the VMM switch back and forth frequently through VM exits and VM entries, so the VMCS is responsible for recording host and guest context. The host RIP and host RSP set here are loaded into RIP and RSP automatically when the guest returns to the VMM through a VM exit. RSP is set to stack + 0x8000, the top of a stack allocated with kmalloc. The VMM cannot reuse whatever RSP the kernel module happens to have at that moment, so a dedicated stack is clearly the most reasonable choice, and it also makes multiple instances easier to handle. RIP is set to the address of _vmexit_handler, which, as the name suggests, handles VM exits. It is implemented in vmexit_handler.S:

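```asm
.code64
.globl _vmexit_handler
.type _vmexit_handler, @function

_vmexit_handler:
    /* Save the guest's general-purpose registers; guest RSP and RIP
       are saved into the VMCS by the processor on VM exit */
    pushq %r15
    pushq %r14
    pushq %r13
    pushq %r12
    pushq %r11
    pushq %r10
    pushq %r9
    pushq %r8
    pushq %rdi
    pushq %rsi
    pushq %rbp
    pushq %rbx
    pushq %rdx
    pushq %rcx
    pushq %rax

    movq %rsp, %rdi        /* first argument: pointer to the saved registers */
    callq handle_vmexit

    /* Restore the registers in reverse order */
    popq %rax
    popq %rcx
    popq %rdx
    popq %rbx
    popq %rbp
    popq %rsi
    popq %rdi
    popq %r8
    popq %r9
    popq %r10
    popq %r11
    popq %r12
    popq %r13
    popq %r14
    popq %r15

    vmresume
    ret                    /* only reached if vmresume fails */
```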

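The function does the following: save the context -> call handle_vmexit(rsp) -> restore the context -> vmresume to re-enter the guest. The trailing ret is only reached if vmresume fails, because a successful vmresume does not fall through. The function must save all general-purpose registers on entry and restore them before returning to the guest; otherwise the registers after returning to the guest would differ from those before the exit, with unpredictable results. That is why it has to be a naked function written in assembly. Passing rsp in rdi gives handle_vmexit a pointer to the saved registers, which is the regs pointer it uses later.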
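Because `movq %rsp, %rdi` passes the address of the last pushed register, the C side sees the registers in reverse push order: rax at offset 0, r15 at offset 112, 15 slots in total and no rsp slot (the guest RSP is saved by hardware in the VMCS, as GUEST_RSP). Compared with `struct guest_regs` listed earlier, which has an `rsp` member between rbp and rsi, every field from `rsp` on is shifted by 8 bytes relative to the saved frame; Peach only touches rax, rbx and rcx, which come before that point. A sketch of a struct that matches the assembly exactly (the name is hypothetical), checked at compile time:

```c
#include <stddef.h>
#include <stdint.h>

/* Mirrors _vmexit_handler's push order: the last push (rax) is at the lowest address. */
struct vmexit_frame {
    uint64_t rax, rcx, rdx, rbx, rbp, rsi, rdi;
    uint64_t r8, r9, r10, r11, r12, r13, r14, r15;
};

/* 15 pushes of 8 bytes each and no rsp slot. */
_Static_assert(sizeof(struct vmexit_frame) == 15 * 8, "frame size");
_Static_assert(offsetof(struct vmexit_frame, rax) == 0, "rax pushed last");
_Static_assert(offsetof(struct vmexit_frame, rsi) == 5 * 8, "rsi follows rbp directly");
_Static_assert(offsetof(struct vmexit_frame, r15) == 14 * 8, "r15 pushed first");
```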

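Let's set handle_vmexit aside for now; it makes more sense after we have seen the complete guest creation process.

Next, set the VPID (virtual-processor identifier), which tags this vCPU's TLB entries:

```c
vmcs_field = 0x00000000; // VIRTUAL_PROCESSOR_ID
vmcs_field_value = 0x0001; // the constant 1
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("VPID = 0x%llx\n", vmcs_field_value);
```

With only one vCPU, simply writing 1 is enough (0 is not allowed when VPIDs are enabled).

Write the EPTP prepared so laboriously earlier, ept_pointer, into the VMCS.

Note that despite its name, ept_pointer is not a C pointer: it is a u64 holding the EPTP value built by init_ept_pointer, i.e. the physical address of the PML4 table (ept_pa) with the control bits OR-ed into its low 12 bits.

```c
vmcs_field = 0x0000201A; // EPT_POINTER
vmcs_field_value = ept_pointer;
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("EPT_POINTER = 0x%llx\n", vmcs_field_value);
```

Set PIN_BASED_VM_EXEC_CONTROL, which controls whether external interrupts (INTR) and NMIs cause VM exits. The value 0x16 consists only of the must-be-1 bits 1, 2 and 4, so neither external-interrupt exiting (bit 0) nor NMI exiting (bit 3) is enabled:

```c
vmcs_field = 0x00004000; // PIN_BASED_VM_EXEC_CONTROL
vmcs_field_value = 0x00000016;
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Pin-based VM-execution controls = 0x%llx\n", vmcs_field_value);
```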

Set CPU_BASED_VM_EXEC_CONTROL and SECONDARY_VM_EXEC_CONTROL:

```c
vmcs_field = 0x00004002; // CPU_BASED_VM_EXEC_CONTROL
vmcs_field_value = 0x840061F2;
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Primary Processor-based VM-execution controls = 0x%llx\n", vmcs_field_value);

vmcs_field = 0x0000401E; // SECONDARY_VM_EXEC_CONTROL
vmcs_field_value = 0x000000A2;
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Secondary Processor-based VM-execution controls = 0x%llx\n", vmcs_field_value);
```

These two fields likewise enable or disable important features. For Peach VM the key point is that executing HLT in the guest OS causes a VM exit, which README.md specifically emphasizes.
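Decoding the two values shows where the HLT exit comes from. 0x840061F2 is the must-be-1 set reported by IA32_VMX_TRUE_PROCBASED_CTLS (its low half, 0x04006172) plus bit 7, HLT exiting, and bit 31, activate secondary controls. 0xA2 sets bit 1 (enable EPT, matching EPT_POINTER), bit 5 (enable VPID, matching the VPID of 1) and bit 7 (unrestricted guest, which lets the guest run in real mode). A sketch with a few bit definitions taken from the Intel SDM; the macro names are illustrative, not Peach's:

```c
#include <stdbool.h>
#include <stdint.h>

/* Selected primary processor-based controls (VMCS field 0x4002). */
#define PROC_HLT_EXITING        (1u << 7)
#define PROC_CR3_LOAD_EXITING   (1u << 15)
#define PROC_CR3_STORE_EXITING  (1u << 16)
#define PROC_ACTIVATE_SECONDARY (1u << 31)

/* Selected secondary processor-based controls (VMCS field 0x401E). */
#define PROC2_ENABLE_EPT        (1u << 1)
#define PROC2_ENABLE_VPID       (1u << 5)
#define PROC2_UNRESTRICTED      (1u << 7)

/* True when every bit in `bits` is set in `value`. */
static bool has(uint32_t value, uint32_t bits)
{
    return (value & bits) == bits;
}
```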

The table below lists the meaning of each bit of CPU_BASED_VM_EXEC_CONTROL; most of the bits decide which instructions or events cause VM exits:

Next, set VM_ENTRY_CONTROLS and VM_EXIT_CONTROLS:

```c
vmcs_field = 0x00004012; // VM_ENTRY_CONTROLS
vmcs_field_value = 0x000011fb;
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("VM-entry controls = 0x%llx\n", vmcs_field_value);

vmcs_field = 0x0000400C; // VM_EXIT_CONTROLS
vmcs_field_value = 0x00036ffb;
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("VM-exit controls = 0x%llx\n", vmcs_field_value);
```

These two are counterparts: one controls behavior on VM entry, the other on VM exit. The tables below give the meaning of each bit of VM_ENTRY_CONTROLS and VM_EXIT_CONTROLS. For example, looking them up shows that VM_ENTRY_CONTROLS is set to:

By the way, writing raw numbers instead of named macros makes these values really hard to look up.
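Since the values are hard-coded, it helps to check them against the capability MSRs printed by PEACH_PROBE. For each IA32_VMX_TRUE_*_CTLS MSR (and IA32_VMX_PROCBASED_CTLS2), a 1 in the low 32 bits means the control must be 1 and a 0 in the high 32 bits means it must be 0. A sketch of the usual adjustment, with hypothetical names; with the logged MSR values all five of Peach's control words pass. It also shows that 0x11fb is exactly the minimum entry-control set (bit 9, IA-32e mode guest, stays 0 for the real-mode guest) and that 0x36ffb is the minimum exit-control set 0x36dfb plus bit 9, host address-space size, which a 64-bit host needs.

```c
#include <stdbool.h>
#include <stdint.h>

/* low 32 bits of the MSR  = allowed-0 settings: 1 means "must be 1"
 * high 32 bits of the MSR = allowed-1 settings: 0 means "must be 0" */
static uint32_t adjust_controls(uint32_t wanted, uint64_t cap_msr)
{
    uint32_t must_be_one = (uint32_t)cap_msr;
    uint32_t may_be_one  = (uint32_t)(cap_msr >> 32);
    return (wanted | must_be_one) & may_be_one;
}

/* A control word is valid when adjusting it changes nothing. */
static bool controls_valid(uint32_t value, uint64_t cap_msr)
{
    return adjust_controls(value, cap_msr) == value;
}
```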

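Before actually launching the guest, save the current RSP and RBP:

```c
asm volatile (
    "movq %%rsp, %0\n\t"
    "movq %%rbp, %1\n\t"
    : "=a" (shutdown_rsp), "=b" (shutdown_rbp)
    :
);
```

This is because when the guest OS executes HLT, handle_vmexit jumps back to the tail of this function and uses that code path to shut the guest down and leave VMX operation. Only by restoring the stack can the function exit normally. I'm not sure whether this unusual control flow in Peach VM is fragile... with a little design it would make a perfect CTF challenge.

After all that preparation it's finally time to start the guest. Entering the guest OS takes just one vmlaunch instruction:

```c
asm volatile (
    "vmlaunch\n\t"
    "setna %[ret]"   // only executed if vmlaunch fails
    : [ret] "=rm" (ret1)
    :
    : "cc", "memory"
);
printk("vmlaunch = %d\n", ret1);
```

If vmlaunch succeeds, execution continues in the guest and never falls through to the next instruction; it only falls through when vmlaunch itself fails. The VMM therefore checks the result afterwards to see whether the vCPU actually ran or returned immediately because of some inconsistency. Peach reads the VM_EXIT_REASON field of the VMCS with vmread. Strictly speaking, for a VMfailValid failure the error number is reported in VM_INSTRUCTION_ERROR (0x4400), while a VM-entry failure caused by invalid guest state is delivered as a VM exit, with bit 31 of the exit reason set, to the host RIP (_vmexit_handler):

```c
vmcs_field = 0x00004402;
asm volatile (
    "vmread %1, %0\n\t" // read the VM_EXIT_REASON field of the VMCS
    : "=r" (vmcs_field_value)
    : "r" (vmcs_field)
);
printk("EXIT_REASON = 0x%llx\n", vmcs_field_value);
```

The show is over; what follows is the shutdown path.

First, a shutdown label is defined with inline assembly:

```c
asm volatile ("shutdown:");
printk("********** guest shutdown **********\n");
```

The reason was given earlier: when handle_vmexit meets HLT it eventually jumps back here, so that execution can return normally from peach_ioctl to the user-space program.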

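```c
/* Leave VMX operation */
asm volatile ("vmxoff");

/* Clear bit 13 of CR4 (CR4.VMXE) to turn the virtualization switch off */
asm volatile (
    "movq %%cr4, %%rax\n\t"
    "btr $13, %%rax\n\t"
    "movq %%rax, %%cr4"
    ::: "rax", "cc"   /* the asm modifies rax and the flags */
);
```

Shutdown mirrors startup and again takes two steps: vmxoff leaves VMX operation, then clearing bit 13 of the host CR4 turns the virtualization switch off.

Finally, let's look at handle_vmexit, which we set aside earlier.

handle_vmexit

As mentioned, every VM exit enters this function, so for debugging it is handy to print the guest registers:

```c
dump_guest_regs(regs);
```

First, read EXIT_REASON with vmread:

```c
vmcs_field = 0x00004402;
asm volatile (
    "vmread %1, %0\n\t"
    : "=r" (vmcs_field_value)
    : "r" (vmcs_field)
);
printk("EXIT_REASON = 0x%llx\n", vmcs_field_value);
```

Different handling is chosen based on EXIT_REASON; a user could, for example, add custom handling for certain PMIO, MMIO or interrupt events. Peach VM only makes a token effort and handles CPUID and HLT: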

```c
switch (vmcs_field_value) {
case 0x0C: // EXIT_REASON_HLT
    /*
     * Restore the rsp and rbp saved before vmlaunch, then
     * jump to the shutdown label defined earlier
     */
    asm volatile (
        "movq %0, %%rsp\n\t"
        "movq %1, %%rbp\n\t"
        "jmp shutdown\n\t"
        :
        : "a" (shutdown_rsp), "b" (shutdown_rbp)
    );
    break;
case 0x0A: // EXIT_REASON_CPUID
    /* On CPUID, set the result registers by hand */
    regs->rax = 0x6368;
    regs->rbx = 0x6561;
    regs->rcx = 0x70;
    break;
default:
    break;
}
```

- On EXIT_REASON_HLT, restore the stack registers of peach_ioctl saved earlier and jump to the shutdown label, which shuts the guest down and returns from the ioctl
- On EXIT_REASON_CPUID, set the guest's registers directly
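The whole handler can be modeled in user space to see its control flow at a glance. In this hypothetical sketch, a plain struct stands in for the saved registers and for the GUEST_RIP, VM_EXIT_REASON and VM_EXIT_INSTRUCTION_LEN fields that the real code accesses with vmread/vmwrite, and returning false stands in for the jump to `shutdown`. Unlike Peach, it switches on the basic exit reason (bits 15:0) only. Incidentally, the CPUID constants read as ASCII from the most significant byte, rcx = "p", rbx = "ea", rax = "ch", spell "peach".

```c
#include <stdbool.h>
#include <stdint.h>

#define EXIT_REASON_CPUID 10
#define EXIT_REASON_HLT   12

/* Hypothetical stand-in for the saved registers and the VMCS fields used. */
struct fake_vcpu {
    uint64_t rax, rbx, rcx;
    uint64_t rip;          /* GUEST_RIP (0x681E) */
    uint32_t exit_reason;  /* VM_EXIT_REASON (0x4402) */
    uint32_t instr_len;    /* VM_EXIT_INSTRUCTION_LEN (0x440C) */
};

/* Returns true if the guest should be resumed, false for shutdown. */
static bool dispatch_exit(struct fake_vcpu *v)
{
    switch (v->exit_reason & 0xffff) {  /* basic exit reason */
    case EXIT_REASON_HLT:
        return false;                   /* Peach jumps to the shutdown label here */
    case EXIT_REASON_CPUID:
        v->rax = 0x6368;                /* same constants as Peach */
        v->rbx = 0x6561;
        v->rcx = 0x70;
        break;
    default:
        break;
    }
    v->rip += v->instr_len;             /* skip the instruction that caused the exit */
    return true;
}
```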

For reference, here are the EXIT_REASON macro definitions:

```c
#define VMX_EXIT_REASONS_FAILED_VMENTRY 0x80000000
#define VMX_EXIT_REASONS_SGX_ENCLAVE_MODE 0x08000000
#define EXIT_REASON_EXCEPTION_NMI 0
#define EXIT_REASON_EXTERNAL_INTERRUPT 1
#define EXIT_REASON_TRIPLE_FAULT 2
#define EXIT_REASON_INIT_SIGNAL 3
#define EXIT_REASON_SIPI_SIGNAL 4
#define EXIT_REASON_INTERRUPT_WINDOW 7
#define EXIT_REASON_NMI_WINDOW 8
#define EXIT_REASON_TASK_SWITCH 9
#define EXIT_REASON_CPUID 10
#define EXIT_REASON_HLT 12
#define EXIT_REASON_INVD 13
#define EXIT_REASON_INVLPG 14
#define EXIT_REASON_RDPMC 15
#define EXIT_REASON_RDTSC 16
#define EXIT_REASON_VMCALL 18
#define EXIT_REASON_VMCLEAR 19
#define EXIT_REASON_VMLAUNCH 20
#define EXIT_REASON_VMPTRLD 21
#define EXIT_REASON_VMPTRST 22
#define EXIT_REASON_VMREAD 23
#define EXIT_REASON_VMRESUME 24
#define EXIT_REASON_VMWRITE 25
#define EXIT_REASON_VMOFF 26
#define EXIT_REASON_VMON 27
#define EXIT_REASON_CR_ACCESS 28
#define EXIT_REASON_DR_ACCESS 29
#define EXIT_REASON_IO_INSTRUCTION 30
#define EXIT_REASON_MSR_READ 31
#define EXIT_REASON_MSR_WRITE 32
#define EXIT_REASON_INVALID_STATE 33
#define EXIT_REASON_MSR_LOAD_FAIL 34
#define EXIT_REASON_MWAIT_INSTRUCTION 36
#define EXIT_REASON_MONITOR_TRAP_FLAG 37
#define EXIT_REASON_MONITOR_INSTRUCTION 39
#define EXIT_REASON_PAUSE_INSTRUCTION 40
#define EXIT_REASON_MCE_DURING_VMENTRY 41
#define EXIT_REASON_TPR_BELOW_THRESHOLD 43
#define EXIT_REASON_APIC_ACCESS 44
#define EXIT_REASON_EOI_INDUCED 45
#define EXIT_REASON_GDTR_IDTR 46
#define EXIT_REASON_LDTR_TR 47
#define EXIT_REASON_EPT_VIOLATION 48
#define EXIT_REASON_EPT_MISCONFIG 49
#define EXIT_REASON_INVEPT 50
#define EXIT_REASON_RDTSCP 51
#define EXIT_REASON_PREEMPTION_TIMER 52
#define EXIT_REASON_INVVPID 53
#define EXIT_REASON_WBINVD 54
#define EXIT_REASON_XSETBV 55
#define EXIT_REASON_APIC_WRITE 56
#define EXIT_REASON_RDRAND 57
#define EXIT_REASON_INVPCID 58
#define EXIT_REASON_VMFUNC 59
#define EXIT_REASON_ENCLS 60
#define EXIT_REASON_RDSEED 61
#define EXIT_REASON_PML_FULL 62
#define EXIT_REASON_XSAVES 63
#define EXIT_REASON_XRSTORS 64
#define EXIT_REASON_UMWAIT 67
#define EXIT_REASON_TPAUSE 68
#define EXIT_REASON_BUS_LOCK 74
```
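Only bits 15:0 of the exit-reason field hold the basic reason these macros describe; bit 31 (VMX_EXIT_REASONS_FAILED_VMENTRY) flags a failed VM entry and bit 27 is the SGX enclave-mode flag. Peach's `switch` compares the raw 32-bit value, so a failed entry such as 0x80000021 (invalid guest state) would fall into `default`. A sketch of a decoder with a small, illustrative name table:

```c
#include <stdbool.h>
#include <stdint.h>

#define VMX_EXIT_REASONS_FAILED_VMENTRY 0x80000000u

struct exit_reason {
    uint16_t basic;     /* bits 15:0, compared against EXIT_REASON_* */
    bool failed_entry;  /* bit 31: the VM entry itself failed */
};

static struct exit_reason decode_exit_reason(uint32_t raw)
{
    struct exit_reason r = {
        .basic = (uint16_t)(raw & 0xffff),
        .failed_entry = (raw & VMX_EXIT_REASONS_FAILED_VMENTRY) != 0,
    };
    return r;
}

/* Names for a few common basic exit reasons. */
static const char *exit_reason_name(uint16_t basic)
{
    switch (basic) {
    case 0:  return "EXCEPTION_NMI";
    case 1:  return "EXTERNAL_INTERRUPT";
    case 10: return "CPUID";
    case 12: return "HLT";
    case 30: return "IO_INSTRUCTION";
    case 33: return "INVALID_STATE";
    case 48: return "EPT_VIOLATION";
    case 49: return "EPT_MISCONFIG";
    default: return "OTHER";
    }
}
```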

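Next, the remaining code prepares for vmresume. Before re-entering the guest after an exit caused by an instruction (such as CPUID), Guest RIP has to be advanced; otherwise the guest would execute the same instruction again and immediately exit again. The VMCS provides the VM_EXIT_INSTRUCTION_LEN field, whose value is exactly the length of the instruction that caused the exit, so adding it to Guest RIP skips that instruction. Peach does this after every exit; a more complete VMM would first check that the exit was caused by an instruction, since the field is not meaningful otherwise.

```c
vmcs_field = 0x0000681E; // read GUEST_RIP
asm volatile (
    "vmread %1, %0\n\t"
    : "=r" (vmcs_field_value)
    : "r" (vmcs_field)
);
printk("Guest RIP = 0x%llx\n", vmcs_field_value);
guest_rip = vmcs_field_value;

vmcs_field = 0x0000440C; // read VM_EXIT_INSTRUCTION_LEN
asm volatile (
    "vmread %1, %0\n\t"
    : "=r" (vmcs_field_value)
    : "r" (vmcs_field)
);
printk("VM-exit instruction length = 0x%llx\n", vmcs_field_value);

vmcs_field = 0x0000681E; // write GUEST_RIP
vmcs_field_value = guest_rip + vmcs_field_value; // skip the instruction that caused the exit
asm volatile (
    "vmwrite %1, %0\n\t"
    :
    : "r" (vmcs_field), "r" (vmcs_field_value)
);
printk("Guest RIP = 0x%llx\n", vmcs_field_value);
```

handle_vmexit ends here.

Summary

That's all for this look at Peach VM and this introduction to Intel VMX. If you can, I recommend stepping through it in a debugger yourself. There is much more to explore in virtualization, such as QEMU source analysis, KVM development and virtualization security. If you're interested, feel free to message me so we can learn from each other!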
